Introduction

WebSocket servers maintain persistent client connections, but when scaled horizontally, each instance only knows about its own connections. This creates a challenge:

❗ Messages sent from Instance A will not reach clients connected to Instance B

Let's solve that problem using Redis Pub/Sub, which lets server instances communicate with each other and broadcast messages to all connected clients, regardless of which server each client is connected to.

1. Why Scale WebSockets with Redis?

- WebSocket servers maintain many persistent connections, which are stateful and demand efficient scaling.

- Running multiple WebSocket server instances is necessary for high volume but requires a mechanism to broadcast messages among all connected clients across instances.

- Redis pub/sub serves as a central messaging hub: each server subscribes to channels and publishes outbound messages. Redis pushes every published message to all subscribed servers, keeping clients in sync.

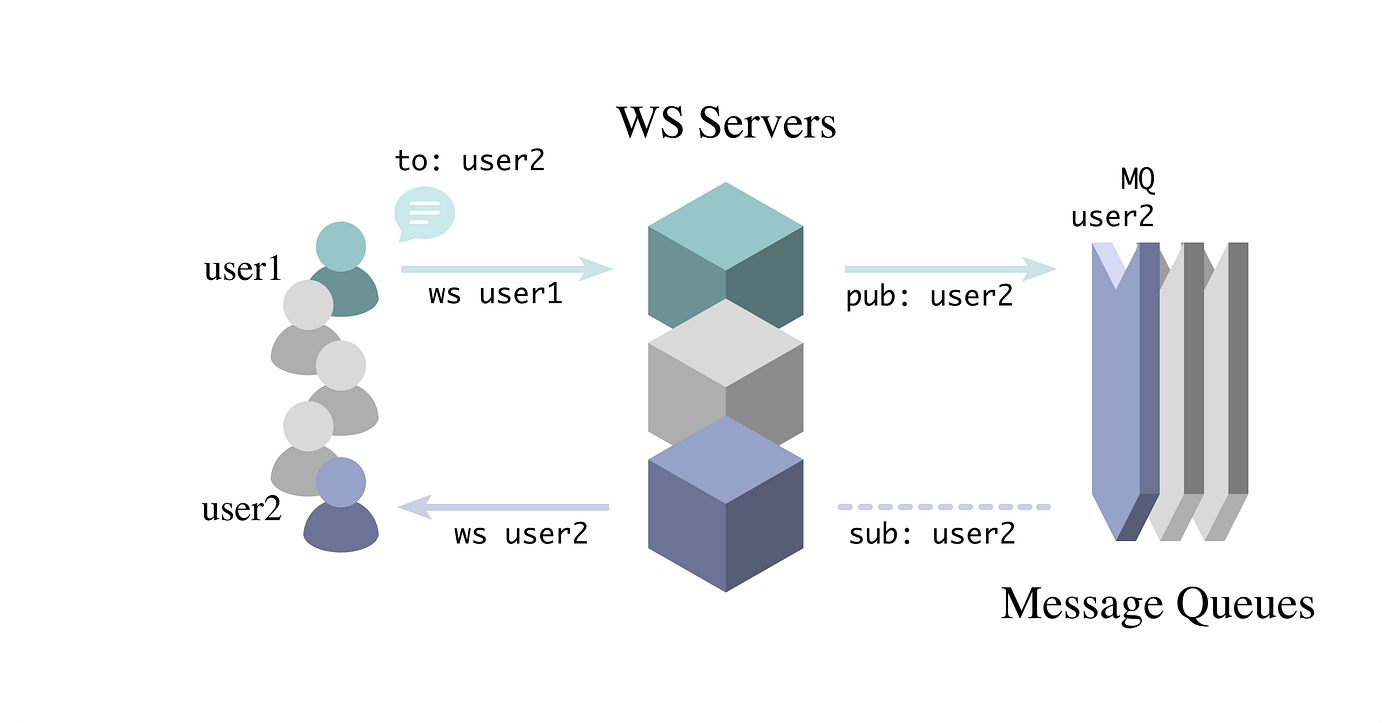

2. Architecture Overview

Components:

- Multiple Go WebSocket server instances, each handling client connections

- A shared Redis server or cluster for pub/sub messaging

- A load balancer (e.g. NGINX or HAProxy) routing WebSocket traffic across instances

Message Flow:

- Client connects via load balancer to one of the WebSocket servers.

- WebSocket server subscribes to relevant Redis channels (e.g. "broadcast", user‑specific topics).

- When a server instance receives a message (from a client or other service), it publishes it to Redis.

- Redis then delivers the message to all subscribers; each server receives it and forwards it to its connected clients.

This decouples servers and scales horizontally by simply adding more instances, all sharing the same Redis pub/sub backbone.
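The message flow above can be sketched in Go without any external dependencies. This is only an illustration under stated assumptions: `Broker`, `memBroker`, `Instance`, and `Client` are hypothetical names, `memBroker` is an in-memory stand-in for Redis (not a Redis client), and a `Client` records messages in a slice instead of writing to a real WebSocket.

```go
package main

import (
	"fmt"
	"sync"
)

// Broker is a hypothetical abstraction over pub/sub. In production it would
// be backed by a Redis client; here it is backed by memBroker below.
type Broker interface {
	Publish(channel, msg string)
	Subscribe(channel string, handler func(msg string))
}

// memBroker is an in-memory stand-in for Redis pub/sub (assumption for the demo).
type memBroker struct {
	mu   sync.Mutex
	subs map[string][]func(string)
}

func newMemBroker() *memBroker {
	return &memBroker{subs: make(map[string][]func(string))}
}

func (b *memBroker) Subscribe(channel string, handler func(string)) {
	b.mu.Lock()
	defer b.mu.Unlock()
	b.subs[channel] = append(b.subs[channel], handler)
}

func (b *memBroker) Publish(channel, msg string) {
	// Copy the handler list so handlers run without holding the lock.
	b.mu.Lock()
	handlers := append([]func(string){}, b.subs[channel]...)
	b.mu.Unlock()
	for _, h := range handlers {
		h(msg)
	}
}

// Client stands in for one WebSocket connection; Inbox records what would
// have been written to the socket.
type Client struct {
	ID    string
	Inbox []string
}

// Instance models one WebSocket server process with its own local clients.
type Instance struct {
	name    string
	broker  Broker
	mu      sync.Mutex
	clients []*Client
}

// NewInstance subscribes the instance to the "broadcast" channel on startup.
func NewInstance(name string, b Broker) *Instance {
	in := &Instance{name: name, broker: b}
	b.Subscribe("broadcast", in.deliverLocal)
	return in
}

// Connect registers a new local client, as the load balancer would route it.
func (in *Instance) Connect(id string) *Client {
	c := &Client{ID: id}
	in.mu.Lock()
	in.clients = append(in.clients, c)
	in.mu.Unlock()
	return c
}

// HandleIncoming publishes instead of writing to local clients directly;
// local delivery happens through the instance's own subscription.
func (in *Instance) HandleIncoming(msg string) {
	in.broker.Publish("broadcast", msg)
}

// deliverLocal forwards a message from the broker to every local client.
func (in *Instance) deliverLocal(msg string) {
	in.mu.Lock()
	defer in.mu.Unlock()
	for _, c := range in.clients {
		c.Inbox = append(c.Inbox, msg)
	}
}

func main() {
	broker := newMemBroker()
	a := NewInstance("A", broker)
	b := NewInstance("B", broker)
	alice := a.Connect("alice")
	bob := b.Connect("bob")

	a.HandleIncoming("hello from alice")
	fmt.Println(alice.Inbox, bob.Inbox) // [hello from alice] [hello from alice]
}
```

Note that the instance never writes to its own clients directly: it publishes and relies on its own subscription, so local and remote clients take the same delivery path.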

3. Redis Pub/Sub: Why It Works

Redis pub/sub is simple, fast, and lightweight, making it ideal for real-time applications:

- One-to-many broadcasting: A single `PUBLISH` sends the message to all subscribers.

- Low latency: Redis keeps its data in memory and is optimized for speed, so a single instance can sustain very high message rates.

- Decoupled architecture: Servers don't need to know about each other.
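To make the one-to-many point concrete, here is a small sketch of what a publisher actually sends. Redis commands travel as RESP arrays of bulk strings, so a broadcast is one short command no matter how many servers are subscribed. The helper name `encodeCommand` and the channel name `broadcast` are assumptions for illustration.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// encodeCommand serializes a Redis command as a RESP array of bulk strings:
// "*<argc>\r\n" followed by "$<byte length>\r\n<arg>\r\n" for each argument.
func encodeCommand(args ...string) string {
	var sb strings.Builder
	sb.WriteString("*" + strconv.Itoa(len(args)) + "\r\n")
	for _, a := range args {
		sb.WriteString("$" + strconv.Itoa(len(a)) + "\r\n" + a + "\r\n")
	}
	return sb.String()
}

func main() {
	// The publisher sends this once; fan-out to subscribers happens in Redis.
	fmt.Printf("%q\n", encodeCommand("PUBLISH", "broadcast", "hello"))
	// "*3\r\n$7\r\nPUBLISH\r\n$9\r\nbroadcast\r\n$5\r\nhello\r\n"
}
```

In practice a client library handles this encoding; the point is only that the publisher's cost does not grow with the number of subscribed servers.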

However, pub/sub does not persist messages, so if a server goes down, it will miss every message published while it was disconnected unless you add persistence via Redis Streams or other layers.
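The fire-and-forget behavior is easy to see in a toy model. The sketch below is not Redis itself: `bus` is a hypothetical in-memory stand-in that, like pub/sub, delivers only to whoever is subscribed at publish time and stores nothing.

```go
package main

import "fmt"

// bus is an in-memory stand-in for a single pub/sub channel (assumption for
// the demo). It keeps no history of published messages.
type bus struct {
	handlers map[string]func(string)
}

func newBus() *bus { return &bus{handlers: make(map[string]func(string))} }

func (b *bus) subscribe(name string, h func(string)) { b.handlers[name] = h }
func (b *bus) unsubscribe(name string)               { delete(b.handlers, name) }

// publish delivers msg to current subscribers only and returns how many
// subscribers received it.
func (b *bus) publish(msg string) int {
	for _, h := range b.handlers {
		h(msg)
	}
	return len(b.handlers)
}

func main() {
	b := newBus()
	var received []string
	onMessage := func(m string) { received = append(received, m) }

	b.subscribe("server-B", onMessage)
	b.publish("m1")

	b.unsubscribe("server-B") // server B goes down
	n := b.publish("m2")      // nobody is listening, so m2 is gone for good

	b.subscribe("server-B", onMessage) // server B comes back
	b.publish("m3")

	fmt.Println(received, n) // [m1 m3] 0
}
```

Server B never sees `m2`, and nothing in the bus lets it ask for a replay. That gap is what a log-based layer such as Redis Streams is meant to close.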

4. Instance Scaling

Using Redis pub/sub allows the WebSocket layer to scale horizontally:

- Add more server instances to support more concurrent users.

- Stateless WebSocket servers (aside from active connections) make deployment and scaling straightforward.

- Shared Redis ensures consistent message delivery across the cluster.

For production-grade systems:

- Use Redis Sentinel or Redis Cluster for high availability.

- Consider message acknowledgment or persistence (e.g., Redis Streams or Kafka) for guaranteed delivery.

- Monitor Redis memory usage and network traffic, especially with large fan-out broadcasts.
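On the server side, large fan-out broadcasts raise a related concern: one slow client should not stall delivery to everyone else. A possible approach, sketched here under assumptions (`localClient` and `fanOut` are hypothetical names, and each client is assumed to have a bounded outbound queue drained by its own write loop), is a non-blocking send that counts drops so they can be exported as a metric.

```go
package main

import "fmt"

// localClient models one WebSocket connection. Send is a bounded outbound
// queue that the connection's write loop (not shown) would drain.
type localClient struct {
	ID   string
	Send chan string
}

// fanOut enqueues msg for every local client without blocking. Clients whose
// queue is full are skipped and counted as dropped.
func fanOut(clients []*localClient, msg string) (delivered, dropped int) {
	for _, c := range clients {
		select {
		case c.Send <- msg:
			delivered++
		default:
			dropped++
		}
	}
	return delivered, dropped
}

func main() {
	fast := &localClient{ID: "fast", Send: make(chan string, 2)}
	slow := &localClient{ID: "slow", Send: make(chan string, 1)}
	slow.Send <- "backlog" // the slow client's queue is already full

	delivered, dropped := fanOut([]*localClient{fast, slow}, "score update")
	fmt.Println(delivered, dropped) // 1 1
}
```

Whether to drop the message, disconnect the client, or buffer more is an application decision; the sketch only shows where that decision point sits.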

5. Use Cases

This Redis-based WebSocket scaling pattern is ideal for:

- Chat apps (group or global messaging)

- Live dashboards or analytics feeds

- Sports scores or stock tickers

Final Thoughts

Scaling WebSocket servers with Redis pub/sub is a powerful and lightweight solution for real-time systems. It enables:

- Simple horizontal scaling

- Loose coupling of server instances

- Instant message distribution

Whether you're building chat apps, real-time dashboards, or collaborative tools, Redis provides the glue that connects all the parts, ensuring all users stay in sync across any number of servers.